Artificial intelligence (AI) has become part of everyday life, from assistants on smartphones to AI-powered search engines. Many AI systems rely on artificial neural networks (ANNs), which are inspired by the structure of the human brain. However, like the brain, ANNs can be confused or manipulated, whether accidentally or intentionally. Adversarial attacks deceive ANNs by exploiting vulnerabilities in their input layers, leading to incorrect decisions and potentially harmful consequences. This vulnerability matters in applications ranging from image classification systems to medical diagnostics and driverless cars.

Unlike humans, ANNs can misinterpret visual inputs that look normal or unambiguous to us. For example, an image-classification system could mistake a cat for a dog, or a driverless car could misread a stop sign as a right-of-way sign. Diagnosing why an AI system made a mistake is not always straightforward, especially when the inputs are not visual. Attackers can take advantage of this by subtly altering input patterns to trick the ANN into misclassifying an input or making an incorrect decision.
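To make the idea of a "subtle alteration" concrete, here is a minimal sketch that uses a toy linear classifier in place of a real ANN. It applies the fast gradient sign method (FGSM), a standard attack from the research literature that this article does not itself describe: every input feature is nudged by the same small amount `eps` in whichever direction hurts the correct class most. The weights, input, and function names are hypothetical.

```python
def sign(v):
    # Returns +1, -1, or 0 depending on the sign of v.
    return (v > 0) - (v < 0)

def score(w, b, x):
    # Linear decision score w·x + b; a non-negative score means class +1.
    return sum(wi * xi for wi, xi in zip(w, x)) + b

def predict(w, b, x):
    return 1 if score(w, b, x) >= 0 else -1

def fgsm_linear(w, x, y, eps):
    # For a linear score the gradient with respect to x is simply w.
    # Stepping each feature by eps against y * sign(w_i) lowers the score
    # of the true class y (+1 or -1) as fast as possible while changing
    # no single feature by more than eps.
    return [xi - eps * y * sign(wi) for wi, xi in zip(w, x)]

# Hypothetical 1,000-feature example.
w = [0.1, -0.1] * 500
x = [0.01, 0.0] * 500
b = 0.0

x_adv = fgsm_linear(w, x, y=predict(w, b, x), eps=0.01)
print(predict(w, b, x), predict(w, b, x_adv))      # 1 -1
print(max(abs(a - c) for a, c in zip(x_adv, x)))   # 0.01
```

No feature changes by more than 0.01, but because all 1,000 tiny changes push the score in the same direction, their combined effect (0.01 per feature times a total weight magnitude of 100) shifts the score by 1.0, turning +0.5 into -0.5.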

Various defense techniques have been developed to protect ANNs from adversarial attacks, but they often have limitations. One common approach is to add noise to the input layer so that the network becomes more tolerant of variations in its inputs. However, this method is not always effective and can be overcome by more sophisticated attacks. Jumpei Ukita and Professor Kenichi Ohki of the University of Tokyo Graduate School of Medicine recognized these limitations and set out to develop a more robust defense.
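As a rough illustration of input-layer noise, the sketch below perturbs an input with Gaussian noise many times and takes a majority vote over the classifier's answers. The voting rule (related to what the literature calls randomized smoothing) is an assumption chosen for illustration; the article does not specify how existing defenses inject or use the noise, and the toy classifier, `sigma`, and function names are hypothetical.

```python
import random

def linear_predict(w, b, x):
    # Toy classifier standing in for an ANN: the sign of w·x + b.
    s = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1 if s >= 0 else -1

def noisy_input_predict(w, b, x, sigma, n_samples=101, seed=0):
    # Add independent Gaussian noise (standard deviation sigma) to every
    # input feature, classify each noisy copy, and return the majority label.
    # The default n_samples is odd, so a vote of +1/-1 labels cannot tie.
    rng = random.Random(seed)
    votes = 0
    for _ in range(n_samples):
        noisy = [xi + rng.gauss(0.0, sigma) for xi in x]
        votes += linear_predict(w, b, noisy)
    return 1 if votes > 0 else -1

w, b = [1.0, -1.0], 0.0
print(noisy_input_predict(w, b, [2.0, 0.5], sigma=0.2))  # 1
```

Raising `sigma` makes small input changes matter less, but it also makes clean inputs that sit close to the decision boundary more likely to be misclassified, so the noise level is a trade-off rather than a free gain.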

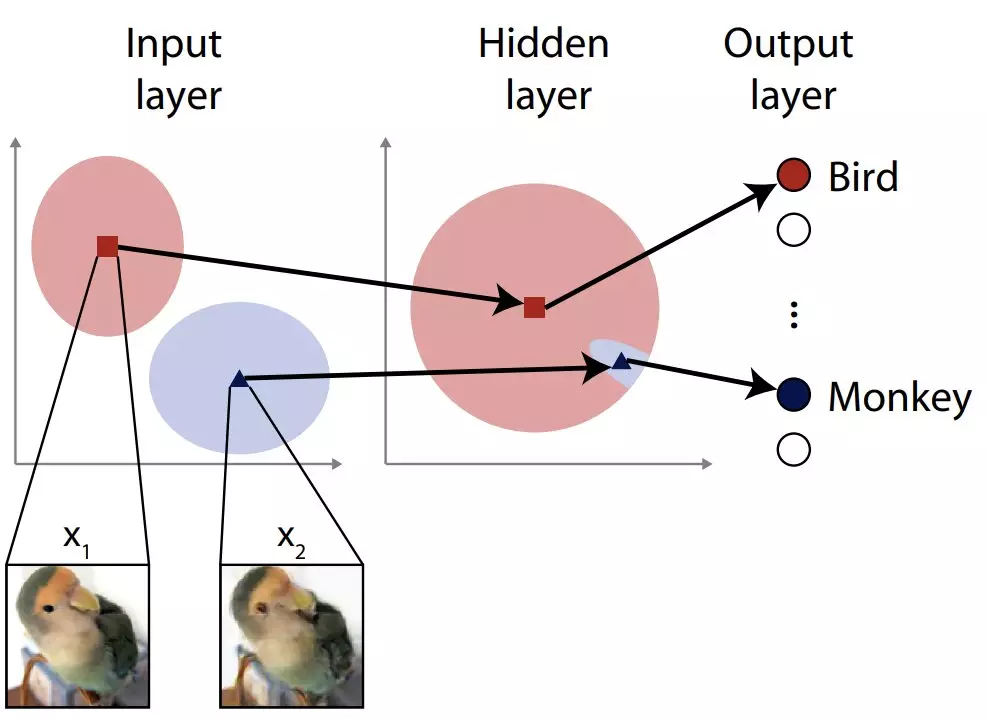

Drawing on their expertise in studying the human brain, Ukita and Ohki devised a defense that goes beyond the input layer: they added noise not only to the input layer but also to deeper layers of the ANN. Adding noise to deeper layers had generally been avoided because of concerns that it would degrade the network’s performance under normal conditions. However, the researchers found that it actually made the ANN more adaptable and less susceptible to adversarial attacks.
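The difference between the two defenses comes down to where in the forward pass the noise is added. The sketch below is a hypothetical two-layer ReLU network written in plain Python, with one noise setting for the input (`input_std`) and one for the hidden layer (`hidden_std`). The layer sizes, the Gaussian noise, its placement after the ReLU, and the weights are all assumptions; the article does not say which layers Ukita and Ohki perturbed or what noise distribution they used.

```python
import random

def matvec(W, x):
    # Multiply matrix W (a list of rows) by vector x.
    return [sum(wij * xj for wij, xj in zip(row, x)) for row in W]

def relu(v):
    return [max(0.0, a) for a in v]

def forward(W1, W2, x, input_std=0.0, hidden_std=0.0, rng=None):
    # Two-layer network: logits = W2 · relu(W1 · x).
    # input_std > 0 adds Gaussian noise to the input (input-layer noise).
    # hidden_std > 0 adds Gaussian noise to the hidden features (feature-space noise).
    if rng is None:
        rng = random.Random(0)
    x = [xi + rng.gauss(0.0, input_std) for xi in x]
    h = relu(matvec(W1, x))
    h = [hi + rng.gauss(0.0, hidden_std) for hi in h]
    return matvec(W2, h)

def argmax(v):
    return max(range(len(v)), key=lambda i: v[i])

# Hypothetical weights: 2 inputs -> 2 hidden units -> 2 classes.
W1 = [[1.0, 0.0], [0.0, 1.0]]
W2 = [[1.0, -1.0], [-1.0, 1.0]]
x = [3.0, 1.0]

print(forward(W1, W2, x))                          # [2.0, -2.0]
print(argmax(forward(W1, W2, x, hidden_std=0.1)))  # 0
```

With both noise levels at zero the function reduces to the clean network, so `hidden_std` can be tuned to balance accuracy on normal inputs against robustness, which is the performance concern that had kept researchers from perturbing deeper layers.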

To test their approach, Ukita and Ohki created hypothetical attacks that target layers deeper than the input layer. These attacks, known as feature-space adversarial examples, deliberately present misleading artifacts to the deeper layers of the ANN. When the researchers injected random noise into these hidden layers, the ANN became more adaptable and better able to defend against the simulated attacks. This result provides a promising foundation for making ANNs more resilient.
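One simple way to model an attack aimed at a deeper layer is to perturb the hidden features directly, applying the same sign-of-gradient step that FGSM applies to inputs. The sketch below assumes the attacker can write to the hidden layer and that the network ends in a linear two-class readout `W2`. It is a hypothetical toy, not the construction of feature-space adversarial examples used in the study, which this article does not describe.

```python
def sign(v):
    return (v > 0) - (v < 0)

def readout(W2, h):
    # Linear output layer: one logit per class.
    return [sum(w * hi for w, hi in zip(row, h)) for row in W2]

def argmax(v):
    return max(range(len(v)), key=lambda i: v[i])

def feature_space_attack(W2, h, true_label, eps):
    # Margin = logit[true] - logit[other]; its gradient with respect to h
    # is W2[true] - W2[other]. Step every hidden feature by eps against that
    # gradient, then clip at zero so features stay non-negative, as they
    # would after a ReLU.
    other = 1 - true_label
    grad = [a - b for a, b in zip(W2[true_label], W2[other])]
    return [max(0.0, hi - eps * sign(g)) for hi, g in zip(h, grad)]

# Hypothetical hidden features and readout weights.
W2 = [[1.0, -1.0, 0.5], [-1.0, 1.0, -0.5]]
h = [1.0, 0.6, 0.2]

h_adv = feature_space_attack(W2, h, true_label=argmax(readout(W2, h)), eps=0.25)
print(argmax(readout(W2, h)), argmax(readout(W2, h_adv)))  # 0 1
```

No hidden feature moves by more than `eps` (0.25 here), yet the predicted class flips from 0 to 1.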

Although the approach proved robust against the specific type of attack the researchers tested, they acknowledge that it needs further development. They aim to make the defense more effective against anticipated attacks and to explore whether it applies to other kinds of attacks. Ukita and Ohki also expect future attackers to devise ways of evading the feature-space noise defense, so ongoing research will be needed to stay one step ahead of potential adversarial tactics.

Artificial neural networks are integral to AI systems, but their vulnerability to adversarial attacks poses significant risks. The defense proposed by Ukita and Ohki addresses the limitations of existing techniques by adding random noise to the inner layers of ANNs, improving the network’s resilience and adaptability and reducing the impact of adversarial attacks. However, ongoing research and continued development are crucial to staying ahead in the arms race between attackers and defenders in AI. By strengthening ANNs against adversarial attacks, we move closer to realizing the full potential of AI technology for the benefit of society.
